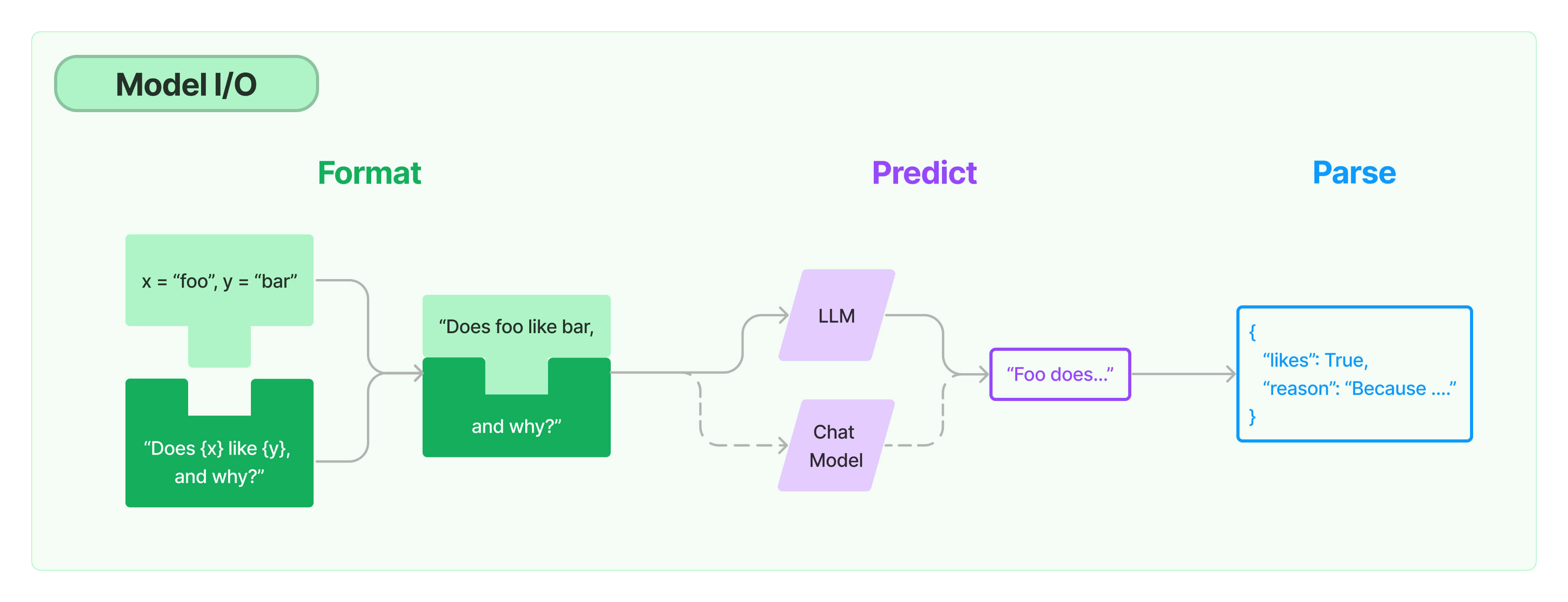

Model I/O

The core element of any language model application is...the model. LangChain gives you the building blocks to interface with any language model.

Quickstart

The quickstart below covers the basics of using LangChain's Model I/O components. It introduces the two types of models, LLMs and Chat Models, then shows how to use Prompt Templates to format the inputs to these models and how to use Output Parsers to work with the outputs.

Language models in LangChain come in two flavors:

ChatModels

Chat models are often backed by LLMs but tuned specifically for having conversations. Crucially, their provider APIs use a different interface than pure text completion models. Instead of a single string, they take a list of chat messages as input and they return an AI message as output. See the section below for more details on what exactly a message consists of. GPT-4 and Anthropic's Claude-2 are both implemented as chat models.

LLMs

LLMs in LangChain refer to pure text completion models. The APIs they wrap take a string prompt as input and output a string completion. OpenAI's GPT-3 is implemented as an LLM.

These two API types have different input and output schemas.
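To make the two schemas concrete, here is a minimal, dependency-free sketch. The Message class and both fake_* functions are hypothetical stand-ins invented for illustration; they do not call any model and are not LangChain classes.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Message:
    """Hypothetical stand-in for a chat message: a role plus text content."""
    role: str
    content: str


def fake_llm(prompt: str) -> str:
    """Text-completion shape: string in, string out."""
    return f"(completion for: {prompt})"


def fake_chat_model(messages: List[Message]) -> Message:
    """Chat shape: list of messages in, a single AI message out."""
    last_content = messages[-1].content
    return Message(role="ai", content=f"(reply to: {last_content})")


completion = fake_llm("Name a sock company.")
reply = fake_chat_model([Message(role="human", content="Name a sock company.")])
print(type(completion).__name__)  # str
print(type(reply).__name__)       # Message
```

The only point of the sketch is the difference in types: code written against one shape cannot be handed the other without some conversion step.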

Additionally, not all models are the same. Different models have different prompting strategies that work best for them. For example, Anthropic's models work best with XML while OpenAI's work best with JSON. You should keep this in mind when designing your apps.

For this getting started guide, we will use chat models and will provide a few options: using an API like Anthropic or OpenAI, or using a local open source model via Ollama.

- OpenAI

- Local (using Ollama)

- Anthropic (chat model only)

- Cohere (chat model only)

First we'll need to install their partner package:

pip install langchain-openai

Accessing the API requires an API key, which you can get by creating an OpenAI account. Once we have a key we'll want to set it as an environment variable by running:

export OPENAI_API_KEY="..."

We can then initialize the model:

from langchain_openai import ChatOpenAI

from langchain_openai import OpenAI

llm = OpenAI()

chat_model = ChatOpenAI(model="gpt-3.5-turbo-0125")


If you'd prefer not to set an environment variable you can pass the key in directly via the api_key named parameter when initializing the OpenAI chat model class:

from langchain_openai import ChatOpenAI

chat_model = ChatOpenAI(api_key="...")
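The choice between an environment variable and an explicit argument can be pictured with a small helper. This is a sketch under assumptions: resolve_api_key is a hypothetical function written for this guide, not part of langchain-openai, and the library's own lookup logic may differ in detail.

```python
import os
from typing import Mapping, Optional


def resolve_api_key(
    explicit_key: Optional[str] = None,
    env_var: str = "OPENAI_API_KEY",
    environ: Optional[Mapping[str, str]] = None,
) -> str:
    """Return the explicit key if one was passed, else the value of env_var."""
    if explicit_key:
        return explicit_key
    source = os.environ if environ is None else environ
    key = source.get(env_var)
    if not key:
        raise ValueError(f"No API key passed and {env_var} is not set.")
    return key


# A plain dict stands in for the process environment so the example
# does not depend on what is actually exported in your shell.
fake_env = {"OPENAI_API_KEY": "key-from-env"}
print(resolve_api_key(environ=fake_env))                  # key-from-env
print(resolve_api_key("key-explicit", environ=fake_env))  # key-explicit
```

Failing early with a clear error when neither source provides a key tends to be easier to debug than an authentication failure on the first request.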


Both llm and chat_model are objects that represent configuration for a particular model.

You can initialize them with parameters like temperature and others, and pass them around.

The main difference between them is their input and output schemas.

The LLM objects take a string as input and output a string.

The ChatModel objects take a list of messages as input and output a message.

We can see the difference between an LLM and a ChatModel when we invoke them.

from langchain_core.messages import HumanMessage

text = "What would be a good company name for a company that makes colorful socks?"

messages = [HumanMessage(content=text)]

llm.invoke(text)

# >> Feetful of Fun

chat_model.invoke(messages)

# >> AIMessage(content="Socks O'Color")


The LLM returns a string, while the ChatModel returns a message.

Ollama allows you to run open-source large language models, such as Llama 2, locally.

First, follow these instructions to set up and run a local Ollama instance:

- Download and install Ollama

- Fetch a model via ollama pull llama2

Then, make sure the Ollama server is running. After that, you can do:

from langchain_community.llms import Ollama

from langchain_community.chat_models import ChatOllama

llm = Ollama(model="llama2")

chat_model = ChatOllama()


First we'll need to install the LangChain x Anthropic package.

pip install langchain-anthropic

Accessing the API requires an API key, which you can get by creating an Anthropic account. Once we have a key we'll want to set it as an environment variable by running:

export ANTHROPIC_API_KEY="..."

We can then initialize the model:

from langchain_anthropic import ChatAnthropic

chat_model = ChatAnthropic(model="claude-3-sonnet-20240229", temperature=0.2, max_tokens=1024)


If you'd prefer not to set an environment variable you can pass the key in directly via the api_key named parameter when initializing the Anthropic chat model class:

chat_model = ChatAnthropic(api_key="...")

First we'll need to install their partner package:

pip install langchain-cohere

Accessing the API requires an API key, which you can get by creating a Cohere account. Once we have a key we'll want to set it as an environment variable by running:

export COHERE_API_KEY="..."

We can then initialize the model:

from langchain_cohere import ChatCohere

chat_model = ChatCohere()


If you'd prefer not to set an environment variable you can pass the key in directly via the cohere_api_key named parameter when initializing the Cohere chat model class:

from langchain_cohere import ChatCohere

chat_model = ChatCohere(cohere_api_key="...")


Prompt Templates

Most LLM applications do not pass user input directly into an LLM. Usually they will add the user input to a larger piece of text, called a prompt template, that provides additional context on the specific task at hand.

In the previous example, the text we passed to the model contained instructions to generate a company name. For our application, it would be great if the user only had to provide the description of a company/product without worrying about giving the model instructions.

PromptTemplates help with exactly this! They bundle up all the logic for going from user input into a fully formatted prompt. This can start off very simple - for example, a prompt to produce the above string would just be:

from langchain_core.prompts import PromptTemplate

prompt = PromptTemplate.from_template("What is a good name for a company that makes {product}?")

prompt.format(product="colorful socks")


What is a good name for a company that makes colorful socks?

There are several advantages to using these over raw string formatting. You can "partial" out variables, i.e. format only some of the variables at a time. You can also compose them, easily combining different templates into a single prompt. For more detail on these features, see the section on prompts.
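The idea behind partial formatting and composition can be sketched with plain Python strings. This is an illustration only: partial_format and _KeepMissing are hypothetical helpers written for this guide, not LangChain's implementation, and the sketch handles only simple {name} placeholders.

```python
class _KeepMissing(dict):
    """dict that leaves unknown placeholders untouched instead of raising KeyError."""

    def __missing__(self, key: str) -> str:
        return "{" + key + "}"


def partial_format(template: str, **known: str) -> str:
    """Fill in only the variables supplied; keep the rest as placeholders."""
    return template.format_map(_KeepMissing(known))


# Compose two small templates into one, then fill it in two steps.
instructions = "Answer in {language}. "
question = "What is a good name for a company that makes {product}?"
combined = instructions + question

step_one = partial_format(combined, language="French")
print(step_one)
# Answer in French. What is a good name for a company that makes {product}?

final = step_one.format(product="colorful socks")
print(final)
# Answer in French. What is a good name for a company that makes colorful socks?
```

Filling variables in stages is useful when some values (such as fixed instructions) are known when the application starts, while others (such as the user's input) only arrive at request time.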

PromptTemplates can also be used to produce a list of messages.

In this case, the prompt contains information not only about the content but also about each message (its role, its position in the list, etc.).

Most often, a ChatPromptTemplate is a list of ChatMessageTemplates.

Each ChatMessageTemplate contains instructions for how to format that ChatMessage: its role and its content.

Let's take a look at this below:

from langchain_core.prompts.chat import ChatPromptTemplate

template = "You are a helpful assistant that translates {input_language} to {output_language}."

human_template = "{text}"

chat_prompt = ChatPromptTemplate.from_messages([

("system", template),

("human", human_template),

])

chat_prompt.format_messages(input_language="English", output_language="French", text="I love programming.")


[

SystemMessage(content="You are a helpful assistant that translates English to French.", additional_kwargs={}),

HumanMessage(content="I love programming.")

]

ChatPromptTemplates can also be constructed in other ways - see the section on prompts for more detail.

Output parsers

OutputParsers convert the raw output of a language model into a format that can be used downstream.

There are a few main types of OutputParsers, including:

- Convert text from an LLM into structured information (e.g. JSON)

- Convert a ChatMessage into just a string

- Convert the extra information returned from a call besides the message (like OpenAI function invocation) into a string.

For full information on this, see the section on output parsers.
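As a rough illustration of the first two kinds of conversion, here is a dependency-free sketch. AIMessageStub, parse_json_text, and message_to_string are hypothetical names invented for this example; LangChain's own parsers have their own classes and handle more cases.

```python
import json
from dataclasses import dataclass
from typing import Any


@dataclass
class AIMessageStub:
    """Hypothetical stand-in for a chat model's reply."""
    content: str


def parse_json_text(text: str) -> Any:
    """Text from an LLM -> structured data (raises ValueError on invalid JSON)."""
    return json.loads(text)


def message_to_string(message: AIMessageStub) -> str:
    """Chat message -> just its text content."""
    return message.content


parsed = parse_json_text('{"name": "Feetful of Fun", "product": "socks"}')
print(parsed["name"])  # Feetful of Fun

text_only = message_to_string(AIMessageStub(content="Feetful of Fun"))
print(text_only)  # Feetful of Fun
```

In both cases the parser's job is the same: take whatever shape the model call returned and hand the rest of the application a plain Python value it can work with.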

In this getting started guide, we use a simple one that parses a list of comma separated values.

from langchain.output_parsers import CommaSeparatedListOutputParser

output_parser = CommaSeparatedListOutputParser()

output_parser.parse("hi, bye")

# >> ['hi', 'bye']


Composing with LCEL

We can now combine all these into one chain. This chain will take input variables, pass those to a prompt template to create a prompt, pass the prompt to a language model, and then pass the output through an (optional) output parser. This is a convenient way to bundle up a modular piece of logic. Let's see it in action!

template = "Generate a list of 5 {text}.\n\n{format_instructions}"

chat_prompt = ChatPromptTemplate.from_template(template)

chat_prompt = chat_prompt.partial(format_instructions=output_parser.get_format_instructions())

chain = chat_prompt | chat_model | output_parser

chain.invoke({"text": "colors"})

# >> ['red', 'blue', 'green', 'yellow', 'orange']

Note that we are using the | syntax to join these components together.

This | syntax is powered by the LangChain Expression Language (LCEL) and relies on the universal Runnable interface that all of these objects implement.

To learn more about LCEL, see the LCEL documentation.
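To build intuition for how a pipe operator can chain components, here is a toy sketch in plain Python. The Step class is a hypothetical stand-in, far simpler than LangChain's Runnable, and fake_model returns a canned string instead of calling a model.

```python
from typing import Any, Callable


class Step:
    """Hypothetical mini-Runnable: wraps a function and supports | chaining."""

    def __init__(self, func: Callable[[Any], Any]) -> None:
        self.func = func

    def invoke(self, value: Any) -> Any:
        return self.func(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new step that runs a, then feeds its output to b.
        return Step(lambda value: other.invoke(self.invoke(value)))


prompt = Step(lambda variables: "List 3 {text}, comma separated.".format(**variables))
fake_model = Step(lambda prompt_text: "red, blue, green")  # canned reply, no API call
parser = Step(lambda text: [item.strip() for item in text.split(",")])

chain = prompt | fake_model | parser
print(chain.invoke({"text": "colors"}))  # ['red', 'blue', 'green']
```

Because every step exposes the same invoke method, any step can be swapped for another with compatible input and output types, which is the property that makes this style of composition modular.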

Conclusion

That's it for getting started with prompts, models, and output parsers! This only scratched the surface of what there is to learn. For more information, check out:

- The prompts section for information on how to work with prompt templates

- The ChatModel section for more information on the ChatModel interface

- The LLM section for more information on the LLM interface

- The output parser section for information about the different types of output parsers.